In their investor pitch last spring, Anthropic described their plan to build AI that could power virtual assistants to do research, answer emails, and manage back-office tasks independently. They called this a “next-gen algorithm for AI self-teaching,” and if everything goes according to plan, they think it could automate a big chunk of the economy.

That plan is now starting to take shape.

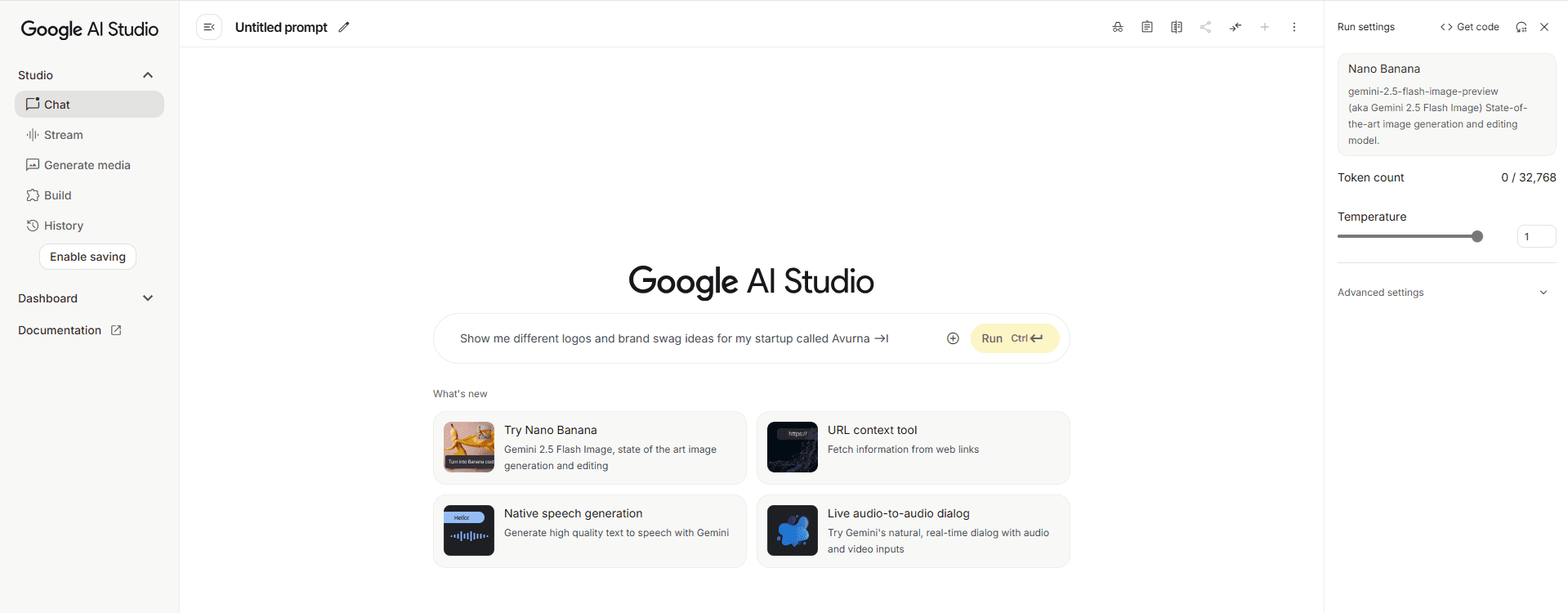

Anthropic has released an upgraded version of its Claude 3.5 Sonnet model that can understand and interact with any desktop application. Through a new “Computer Use” API, currently in open beta, the model can imitate keystrokes, button clicks, and mouse gestures, essentially emulating a person sitting at a PC. “We trained Claude to watch what’s happening on a screen and use the software tools available to do tasks,” Anthropic wrote in a blog post. “When a developer tells Claude to use a specific piece of software and gives it access, Claude looks at screenshots of what the user sees, then calculates how many pixels to move the cursor up or down to click in the right spot.”

Developers can try Computer Use through Anthropic’s API as well as on platforms like Amazon Bedrock and Google Cloud’s Vertex AI. The new 3.5 Sonnet without Computer Use is rolling out to the Claude apps and brings various performance improvements over the previous 3.5 Sonnet model.
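For readers curious what a Computer Use call involves, here is a minimal Python sketch that only assembles the request; it makes no network call. The model ID, the `computer_20241022` tool type, and the `computer-use-2024-10-22` beta flag are assumptions based on Anthropic's October 2024 beta and may have changed since. The helper name `build_computer_use_request` and the example task are illustrative only.

```python
import json

# Identifiers from Anthropic's October 2024 Computer Use beta (assumptions:
# check the current documentation before relying on them).
MODEL = "claude-3-5-sonnet-20241022"
BETA_FLAG = "computer-use-2024-10-22"


def build_computer_use_request(task: str, width: int = 1024, height: int = 768) -> dict:
    """Return keyword arguments for a hypothetical Computer Use request (no network call)."""
    computer_tool = {
        "type": "computer_20241022",  # versioned type of the built-in computer tool
        "name": "computer",
        "display_width_px": width,    # screen size Claude reasons about, in pixels
        "display_height_px": height,
        "display_number": 1,          # X11 display number (optional)
    }
    return {
        "model": MODEL,
        "max_tokens": 1024,
        "tools": [computer_tool],
        "messages": [{"role": "user", "content": task}],
        "betas": [BETA_FLAG],
    }


if __name__ == "__main__":
    request = build_computer_use_request("Open the browser and check the weather.")
    print(json.dumps(request, indent=2))
    # With the official SDK installed and an API key set, this could be sent as:
    #   import anthropic
    #   anthropic.Anthropic().beta.messages.create(**request)
```

The 1024 by 768 screen size is only an example value; because Claude counts pixels on the screenshots it receives, the declared size should match the screen the developer actually captures.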

Automating Apps

A tool that can automate tasks on a PC is not new. Many companies, from long-time RPA (Robotic Process Automation) vendors to newer ones like Relay, Induced AI, and Automat, have been offering such solutions.

As the race for AI agents heats up, the landscape gets crowded. The term “AI agents” is still somewhat vague but generally refers to AI systems that can automate software tasks.

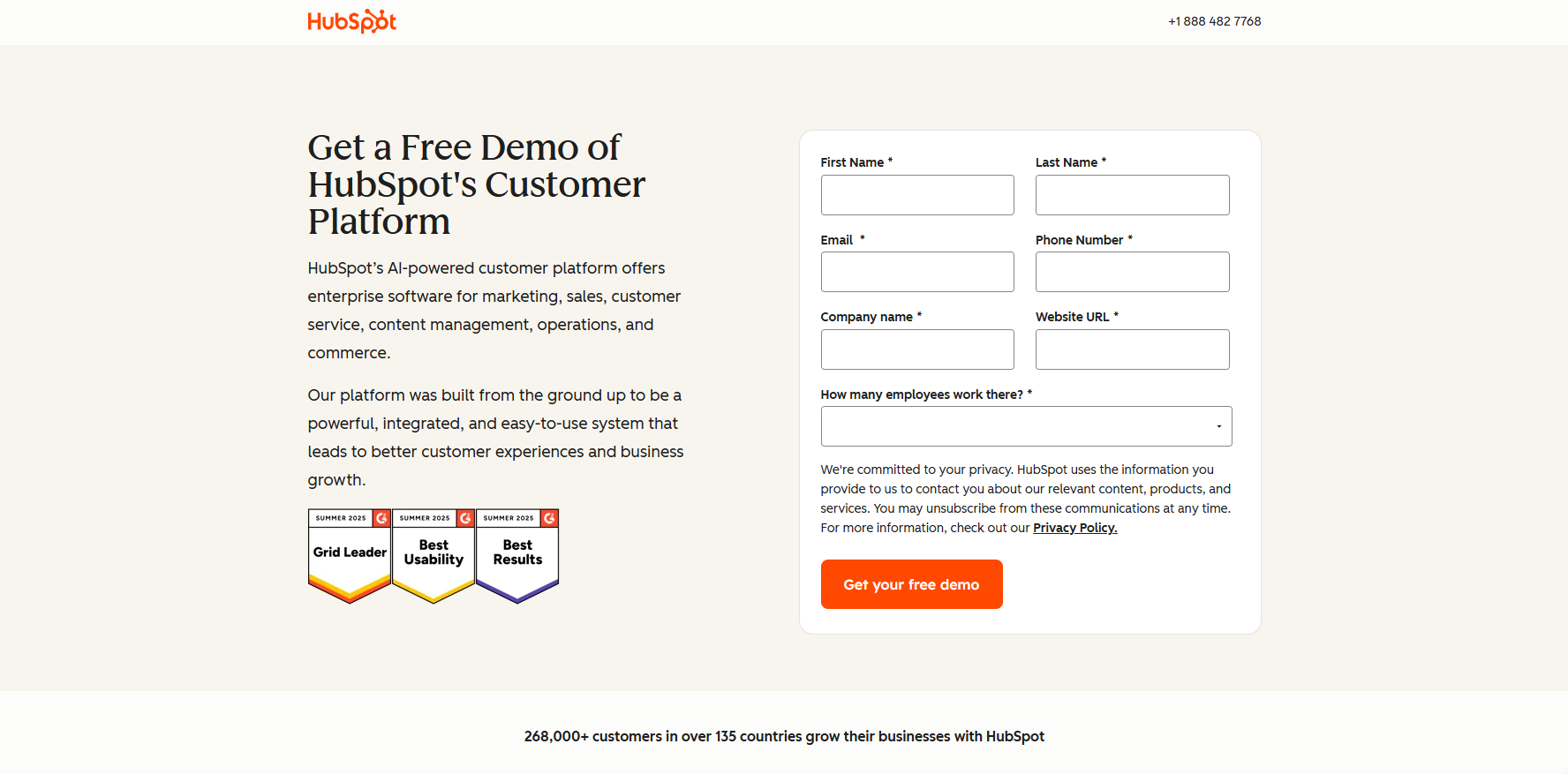

Some analysts say AI agents could be a way for companies to monetize their big investment in AI. Businesses seem to agree: a recent Capgemini survey found that 10% of companies are already using AI agents, and 82% plan to in the next 3 years.

AI Agent News

This summer, Salesforce made news with its AI agent announcements, and Microsoft recently released new tools for building AI agents. OpenAI is also working on its own AI agents and sees this as a step towards super-intelligent AI.

Anthropic’s Action-Execution Layer

Anthropic calls their take on the AI agent concept an “action-execution layer,” which allows the new 3.5 Sonnet model to perform desktop-level commands. Notably, the model can browse the web. It isn’t the first AI model to do so, but it’s a first for Anthropic, and it means the model can use any website or application.

“Humans are in control by providing specific prompts that tell Claude what to do, like ‘use data from my computer and online to fill out this form,’” an Anthropic spokesperson said. Users enable and limit access as needed, and Claude translates their prompts into computer commands (for example, move the cursor, click, type) to carry out the task.
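Claude doesn’t move the mouse itself; it returns tool-use requests (such as `mouse_move` with a pixel coordinate, `left_click`, or `type`) that the developer’s own code carries out. The sketch below shows one hypothetical way to translate those requests into `xdotool` commands on a Linux desktop. The action names follow the October 2024 computer tool as an assumption, `to_xdotool` is a made-up helper, and screenshot capture (which sends the image back to Claude) is left out.

```python
import shlex

# Hypothetical dispatcher: maps the "input" of a Computer Use tool_use block
# to an xdotool command line (Linux/X11). It only builds argv lists and never
# executes them, so it is safe to experiment with.
CLICK_BUTTONS = {"left_click": "1", "middle_click": "2", "right_click": "3"}


def to_xdotool(action_input: dict) -> list[str]:
    action = action_input["action"]
    if action == "mouse_move":
        x, y = action_input["coordinate"]  # pixel position chosen by the model
        return ["xdotool", "mousemove", "--sync", str(x), str(y)]
    if action in CLICK_BUTTONS:
        return ["xdotool", "click", CLICK_BUTTONS[action]]
    if action == "double_click":
        return ["xdotool", "click", "--repeat", "2", "1"]  # two left clicks
    if action == "type":
        return ["xdotool", "type", "--", action_input["text"]]
    if action == "key":
        # Key names such as "Return" or "ctrl+s"
        return ["xdotool", "key", "--", action_input["text"]]
    raise ValueError(f"unsupported action: {action}")


if __name__ == "__main__":
    # A plausible sequence for "click the search box and search for flights".
    steps = [
        {"action": "mouse_move", "coordinate": [512, 140]},
        {"action": "left_click"},
        {"action": "type", "text": "flights to Boston"},
        {"action": "key", "text": "Return"},
    ]
    for step in steps:
        print(shlex.join(to_xdotool(step)))
```

A real agent loop would run each command, take a fresh screenshot, and return it to the model before the next step; that loop is omitted here to keep the example self-contained.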

Real World Applications

The software development platform Replit has used an early version of the new 3.5 Sonnet model to build an “autonomous verifier” that can test applications during development. Canva is exploring how the new model can be used for design and editing.

These examples show how AI agents can automate workflows and increase productivity. Imagine a world where AI does mundane things, and professionals do creative and strategic work. This could change industries and the way we work.

The Competition

But how is this different from other AI agents available? That’s a fair question. For example, consumer gadget startup Rabbit is building a web agent that can buy movie tickets online. Adept, which was recently acqui-hired by Amazon, trains models to browse websites and navigate software, and Twin Labs uses off-the-shelf models, including OpenAI’s GPT-4o, to automate desktop tasks.

In this crowded space, differentiation is critical. Companies need to clearly explain what sets their AI agents apart. For Anthropic, focusing on an action-execution layer could be a big advantage if they can deliver on performance and reliability.

Performance Metrics

Anthropic says the new 3.5 Sonnet is a stronger, more robust model and claims it outperforms OpenAI’s o1 model on coding tasks, as measured by the SWE-bench Verified benchmark. Even though it wasn’t explicitly trained to do so, the new 3.5 Sonnet can self-correct and retry when it hits an obstacle, and it can work toward objectives that require multiple steps.

But don’t fire your secretary just yet.

In a test designed to evaluate how well an AI agent can help with airline booking tasks, like modifying a flight reservation, the new 3.5 Sonnet successfully completed fewer than half of the tasks. In a separate test involving actions like initiating a return, it failed roughly a third of the time.

Challenges

Anthropic admits the new 3.5 Sonnet struggles with basic actions like scrolling and zooming and can miss “short-lived” actions and notifications because it takes screenshots and stitches them together.

“Claude’s Computer Use is slow and often buggy,” Anthropic said in their post. “We recommend you start with low-risk tasks.”

This is key. The potential for AI agents is huge, but the technology is still in its infancy. As developers and companies experiment with these tools, we need to set realistic expectations and understand the limitations of current AI.

High Risk

Multi-Step Agent Behavior

A recent study found that even models that can’t use desktop applications, such as OpenAI’s GPT-4o, will engage in harmful “multi-step agent behavior” when attacked with jailbreak techniques. For example, they will try to order a fake passport from someone on the dark web. The researchers found that these jailbreaks achieved high success rates on harmful tasks, even against models with filters and safeguards.

Imagine what a model with desktop access could do—exploit app vulnerabilities to steal personal info or store sensitive chats in plaintext. The combination of software capabilities and online connections gives malicious actors new attack vectors.

Anthropic’s Risk Disclosure

Anthropic doesn’t sugarcoat the risks of releasing the new 3.5 Sonnet. However, they argue that the benefits of seeing how the model is used in the wild outweigh the risks. “We think it’s better to give computers to today’s more limited, safer models,” they said, “so we can see and learn from any problems that arise at this lower level and build up computer use and safety mitigations gradually and in parallel.”

Safety Features

To prevent misuse, Anthropic has implemented several safety measures. For example, the new 3.5 Sonnet was not trained on user screenshots and prompts and was not allowed to access the web during training. They have also developed classifiers that will “nudge” the model away from high-risk actions like posting on social media, creating accounts, and interacting with government websites.

As the U.S. general election approaches, Anthropic is particularly concerned about election-related abuse of its models. The U.S. AI Safety Institute and the U.K. AI Safety Institute, two government bodies that evaluate AI model risk, tested the new 3.5 Sonnet before it was released.

Data and Compliance

Anthropic told TechCrunch they can block access to additional websites and features if needed to prevent spam, fraud, and misinformation. As a precaution, they retain screenshots captured by Computer Use for at least 30 days, which may make developers nervous.

When asked under what circumstances they would hand over screenshots to third parties like law enforcement, a spokesperson said they would comply with requests for data in response to valid legal process.

Ongoing Evaluation and User Responsibility

“There is no silver bullet, and we will continue to evaluate and improve our safety measures to balance Claude’s capabilities with responsible use,” Anthropic said. “Those using the computer-use version of Claude should take the necessary precautions to minimize these risks, including isolating Claude from sensitive data on their computer.” This is a reminder that user responsibility is key to managing the risks of AI. As we deploy this technology, we must be vigilant and proactive in protecting our data.

A Cheaper Model Coming Soon

While the 3.5 Sonnet was the star of today’s show, Anthropic also announced an updated version of Haiku, the most affordable and efficient model in the Claude series.

Claude 3.5 Haiku

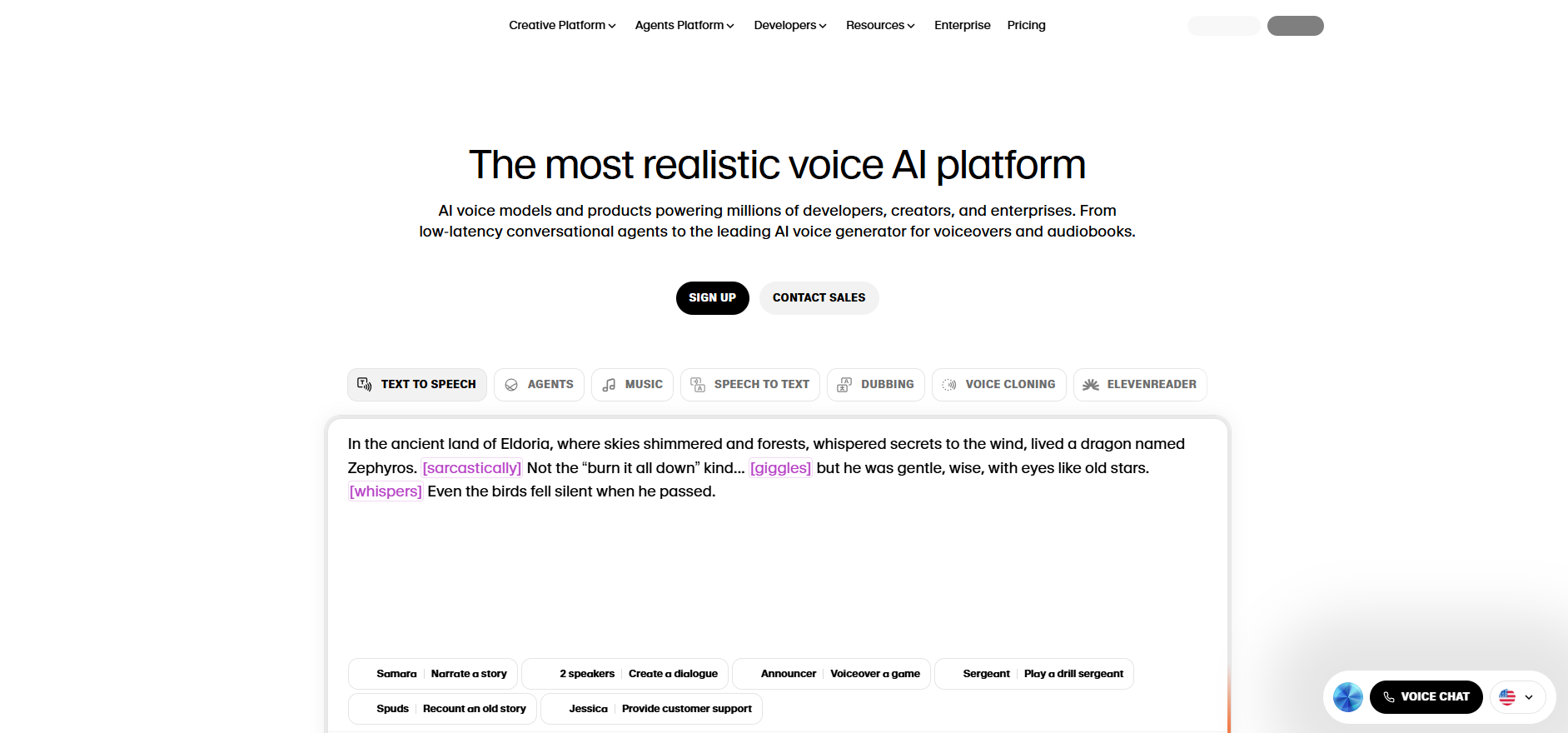

Launching in the next few weeks, Claude 3.5 Haiku will match the performance of Claude 3 Opus on certain benchmarks, and it will do so at the same price and “similar speed” as Claude 3 Haiku. “With low latency, better instruction following, and more accurate tool use, Claude 3.5 Haiku is good for user-facing products, specialized sub-agents, and generating personalized experiences from large datasets, like purchase history, pricing, or inventory data,” Anthropic said in a blog post.

Text to Multimodal

3.5 Haiku will initially be available as a text-only model, and later as part of a multimodal package that can process both text and images. This is a big deal because it reflects the growing need for AI models that can handle multiple data types.

Claude Models Future

With 3.5 Haiku coming out, you might wonder if there will be a reason to use 3 Opus. And what about 3.5 Opus, the model Anthropic announced back in June? “All the models in the Claude 3 family have their use cases for customers,” an Anthropic spokesperson said. “3.5 Opus is on our roadmap; we’ll let you know as soon as possible.”

Personal Opinion

Having multiple models in the Claude family is a good strategy. It allows Anthropic to serve many different customer needs, from high-performance to cost-effective. As AI evolves, having a range of models means different market segments can find a fit.

The focus on low latency and better instruction following in 3.5 Haiku shows that Anthropic is aware of the importance of user experience. In a world where speed and efficiency matter most, these improvements will make a big difference in how users interact with AI.